Making sense of the Linux logs

I wanted to write an article about a favorite tool of mine, fail2ban, but I felt I couldn’t just start writing about it without first covering the Linux logs. It’s like writing about printing without knowing about paper. So here we are: how do we wrap our heads around the Linux logs?

First of all, Linux has an abundance of logs. You have, of course, the kernel logs, the boot logs and other system-related logs, and then you have logs for each and every application your Linux distribution runs. If you run an application, you can be quite sure there is a log somewhere about it. And the best part? Linux logs usually follow a consistent pattern and usually live in /var/log. This is unlike Windows, for example, where the system logs are in one place and each application handles its logs the way the developer saw fit.

If you take a look in /var/log, you will see something like this:

You can see your boot logs there, the logs of dnf, which handles package installation and upgrades, firewall logs and so on. Next there are folders with logs for sssd, httpd and others. The main logs have the .log extension, and the ones with a date in the extension are rotated logs. Linux keeps moving older logs away into files with extensions like .log-20220414 to keep the recent logs cleaner and easier to read.
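To make the naming pattern concrete, here is a minimal Rust sketch that tells current logs from rotated ones. It assumes the date suffix shown above (a dash followed by eight digits). The function name split_rotated and the sample file names are made up for illustration, and other rotation setups may use different suffixes.

```rust
/// Splits a log file name into its base name and rotation date, if any.
/// Assumes the `name-YYYYMMDD` suffix described in this article; other
/// setups may use numeric suffixes or compressed files instead.
fn split_rotated(file_name: &str) -> (&str, Option<&str>) {
    if let Some((base, suffix)) = file_name.rsplit_once('-') {
        let is_date = suffix.len() == 8 && suffix.bytes().all(|b| b.is_ascii_digit());
        if is_date {
            return (base, Some(suffix));
        }
    }
    (file_name, None)
}

fn main() {
    // Hypothetical file names, modeled on the examples in this article.
    for name in ["dnf.log", "dnf.log-20220414", "boot.log-20220410", "firewalld"] {
        match split_rotated(name) {
            (base, Some(date)) => println!("{name}: rotated copy of {base} from {date}"),
            (base, None) => println!("{name}: current log ({base})"),
        }
    }
}
```

A name that merely contains a dash, such as my-app.log, is still treated as a current log because its suffix is not an eight-digit date.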

Now, of course you can cat your way through these files one by one and see what’s in them, but Linux provides a better tool for checking the logs: journalctl. Let’s see what it does:

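If you simply run journalctl without parameters, you will get all logs ordered by timestamp, from the very beginning of log recording. The command reads the systemd journal, which collects messages from the kernel, system services and applications in one place, so you won’t have to go through each source by yourself. Keep in mind that applications that write only to their own files in /var/log may not show up here. You get the timestamp, the host name, the application and the message. You can see the very first log messages are from the kernel boot.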

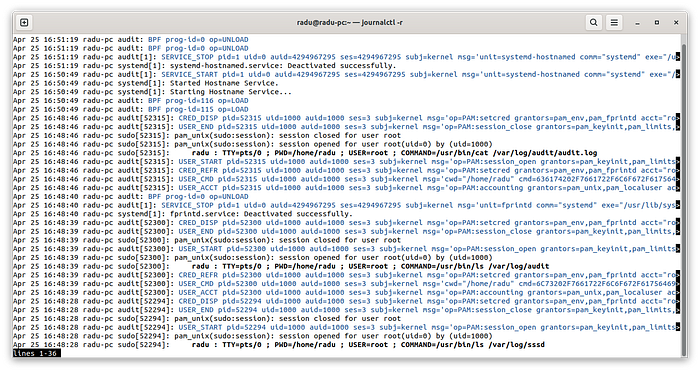
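If you ever need to process this output in a program, each line can be split into its parts. Below is a rough sketch in Rust, assuming the default output format with an English locale. The Entry struct, the parse_short_line function and the sample lines (including the host name fedora) are illustrative assumptions, not real log data.

```rust
/// One journal entry as shown by journalctl's default output.
#[derive(Debug, PartialEq)]
struct Entry<'a> {
    timestamp: &'a str,
    host: &'a str,
    identifier: &'a str,
    pid: Option<u32>,
    message: &'a str,
}

/// Parses a line such as `Apr 14 16:37:02 host app[123]: message`.
/// Returns None for lines that do not follow this layout.
fn parse_short_line(line: &str) -> Option<Entry<'_>> {
    // Assumption: the timestamp ("Apr 14 16:37:02") is 15 characters wide.
    if line.len() < 16 || !line.is_char_boundary(15) {
        return None;
    }
    let (timestamp, rest) = line.split_at(15);
    let rest = rest.strip_prefix(' ')?;
    let (host, rest) = rest.split_once(' ')?;
    let (tag, message) = rest.split_once(": ")?;
    // The PID in brackets is optional; kernel messages have none.
    let (identifier, pid) = match tag.strip_suffix(']').and_then(|t| t.rsplit_once('[')) {
        Some((name, pid)) => (name, pid.parse::<u32>().ok()),
        None => (tag, None),
    };
    Some(Entry { timestamp, host, identifier, pid, message })
}

fn main() {
    // Made-up sample lines in the default journalctl layout.
    let samples = [
        "Apr 14 16:37:02 fedora NetworkManager[1234]: starting connection",
        "Apr 14 16:37:01 fedora kernel: PM: suspend exit",
    ];
    for line in samples {
        match parse_short_line(line) {
            Some(e) => println!(
                "{} | {} | {} | {:?} | {}",
                e.timestamp, e.host, e.identifier, e.pid, e.message
            ),
            None => println!("unrecognized line: {line}"),
        }
    }
}
```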

This is great but not very useful because usually we need the latest logs if we want to track something that just happened. To do that, we use the -r parameter:

This is starting to be useful. But many entries are missing: when we run journalctl with regular user privileges, it usually shows only part of the logs. From now on we will always run journalctl with sudo.

But there’s another trick. You see logs from the kernel and all applications right now, but you probably want to see logs for a specific application. To do that, we add the -t parameter: sudo journalctl -r -t gdm. This will show us all logs for the gdm application:

Let’s try a more useful filter. By adding the -g parameter we can filter the log by message. We want to see every time this device connected to WiFi:

#sudo journalctl -r -t NetworkManager -g "starting connection"

Every time this device reconnects to WiFi is a hint that the system resumed from sleep. The last wake-up happened at around 16:37. Let’s see if that is correct:

#sudo journalctl -r -t kernel -g "waking up"

Sure enough, the first message is a wake-up log from 16:37.
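The same filters can be composed from a program. Here is a small Rust sketch that builds the journalctl command line from an optional identifier and an optional message pattern, using only the -r, -t and -g parameters covered above. The journalctl_query helper is a hypothetical name, and the sketch runs journalctl without sudo, so the output may be incomplete.

```rust
use std::process::Command;

/// Builds `journalctl -r [-t IDENTIFIER] [-g PATTERN]` without running it.
fn journalctl_query(identifier: Option<&str>, pattern: Option<&str>) -> Command {
    let mut cmd = Command::new("journalctl");
    cmd.arg("-r"); // newest entries first
    if let Some(id) = identifier {
        cmd.args(["-t", id]); // filter by application identifier
    }
    if let Some(p) = pattern {
        cmd.args(["-g", p]); // filter by message text
    }
    cmd
}

fn main() {
    let mut cmd = journalctl_query(Some("NetworkManager"), Some("starting connection"));
    println!("running: {cmd:?}");
    // The command inherits our terminal, so the entries are printed directly.
    match cmd.status() {
        Ok(status) => println!("journalctl exited with {status}"),
        Err(err) => eprintln!("could not start journalctl: {err}"),
    }
}
```

Keeping the builder separate from the call to status() makes it easy to check the arguments before anything is executed.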

Checking logs interactively using lnav

Using journalctl is nice: it brings the logs together in one place, and that makes life a little easier. It also reads older entries, so you don’t have to worry about log rotation. You can also filter everything; all in all it’s a great tool. But if you want to move through the logs interactively, like going through all errors or simply using keys to scan the logs, there’s a better tool for that: lnav. To run it, we use sudo again and we give it the logs folder:

#sudo lnav /var/log

You can see a few things from the screenshot. First of all, the very last line shows some nice statistics: the error rate and when the last log was recorded. Next, what you see here updates in real time, so if an application writes a log, you will see it here automatically.

Next there is the log file indicator: you can see to the right some colored lines. These indicate that a group of logs come from the same log file. At the top of the output you see a gray bar showing the log date at the beginning and the log file to the right. So you can scroll down to the purple line for example and see in the gray bar that the log file the messages from the purple group belong to is the dnf log:

Now, lnav has very powerful navigation options. If you press e and shift+e you will go to the next or to the previous error recorded. Here is the output after I pressed shift+e to go to the previous (in this case last) error recorded:

It’s the first one in the list. And we can see in the top gray bar that it comes from the dnf log. If we go up a bit, we see what was going on:

Looks like I was trying to remove some packages. If we go up still, we can get to the command that initiated it all:

There it is, in the first row: dnf autoremove. So what did I do? I ran sudo dnf autoremove, I looked then at the list of packages that dnf wants to remove and then I aborted the operation.

In the same way you can use w and shift+w to go through warnings. Using lnav makes it a lot more convenient, especially when you are not sure what you are looking for. You can easily scan errors and warnings and see what was happening with your machine.

The graphical way

Of course, there will always be GNOME Logs. This is a simple way to check logs, especially when you have GNOME installed, but keep the console tools in mind too, because your home server most likely won’t run GNOME.

GNOME Logs makes it easy to read the logs, especially since it helps you filter them by category: applications, system, security and hardware. But again, this won’t work on your home server.

How to write a log entry

Linux makes it easy to write log entries by using logger. This is very useful if you write bash scripts and you want to log your activity. With the -t parameter, logger allows you to specify the log ID, the one we filtered with journalctl -t, so you know what to look for in the future. The -i parameter adds the PID to the entry:

#logger -i -t test-script "Hello, this is a log entry"

#journalctl -r

You can see the ID we provided, test-script, and next to it the PID of the process that produced the entry, 53351 in my case. Writing logs this way, through the system log, is the preferred way to document things in Linux. You should not scatter your own log files around the system. Using logger ensures that journalctl and other tools can read your entries, that they are stored with the rest of the system logs, and that they follow the standard Linux logging format. This is very important if you want these logs to help you in the future.

If you write a Rust application and want to use logging, again you should use the Linux logging standard. For Rust you can use the log and syslog crates in the application Cargo.toml file:

    [package]
    name = "news"
    version = "0.9.0"
    authors = ["Radu Zaharia <raduzaharia@outlook.com>"]
    edition = "2021"

    [dependencies]
    log = "*"
    syslog = "*"

And then you configure logging in your main.rs:

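    use log::LevelFilter;
    use syslog::{BasicLogger, Facility, Formatter3164};

    // In an async application, keep your runtime's attribute and `async fn main`.
    fn main() -> std::io::Result<()> {
        // Describe how our messages are tagged in the system log.
        let formatter = Formatter3164 {
            facility: Facility::LOG_USER,
            hostname: None,
            process: "news".into(),
            pid: 0,
        };

        // Connect to the local syslog socket.
        let logger = syslog::unix(formatter).unwrap();

        // Route the log crate's macros to syslog, at Info level and above.
        log::set_boxed_logger(Box::new(BasicLogger::new(logger)))
            .map(|()| log::set_max_level(LevelFilter::Info))
            .unwrap();

        Ok(())
    }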

Note the process field, which should match your application name. Then you can write to the Linux logs using the error!, warn! or info! macros from the log crate:

    error!("This is an error message!");

Again, this will ensure that you are writing logs according to the Linux system log standards and will help you make sense of the records when using standard tools like journalctl.
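If you are curious what such an entry roughly looks like before it reaches the system log, here is an illustrative sketch of the RFC 3164 layout that Formatter3164 is named after. This is not the syslog crate's code. It assumes the priority is computed as facility times eight plus severity, with the numeric codes from the RFC, and the timestamp and PID are made-up values.

```rust
/// Numeric codes from RFC 3164: facility "user" is 1, and severities
/// run from 0 (emergency) to 7 (debug).
const FACILITY_USER: u8 = 1;
const SEVERITY_ERROR: u8 = 3;
const SEVERITY_WARNING: u8 = 4;
const SEVERITY_INFO: u8 = 6;

/// PRI = facility * 8 + severity.
fn priority(facility: u8, severity: u8) -> u8 {
    facility * 8 + severity
}

/// Lays out a message without a host name field, mirroring `hostname: None`.
fn format_3164(severity: u8, timestamp: &str, process: &str, pid: u32, message: &str) -> String {
    format!(
        "<{}>{} {}[{}]: {}",
        priority(FACILITY_USER, severity),
        timestamp,
        process,
        pid,
        message
    )
}

fn main() {
    // The timestamp and PID below are made-up sample values.
    let samples = [
        (SEVERITY_ERROR, "This is an error message!"),
        (SEVERITY_WARNING, "This is a warning message!"),
        (SEVERITY_INFO, "This is an info message!"),
    ];
    for (severity, message) in samples {
        println!("{}", format_3164(severity, "Apr 14 16:37:02", "news", 4242, message));
    }
}
```

The process value ends up right before the PID in brackets, which is why it is worth setting it to your application name.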

That’s all about logging. Understanding the Linux logs takes us a step closer to what we really want: automating tasks based on log entries. We can do that with fail2ban but that’s for another article. Until then, I hope you enjoyed the article and see you next time!